【Release date】: 2022-1-27

【News source】: udn.com (聯合新聞網)

http://web.ee.nthu.edu.tw/p/406-1175-222331,r2471.php?Lang=zh-tw

A cross-disciplinary team at National Tsing Hua University (NTHU), combining electrical engineering and life science, has developed a bionic vision technology that teaches drones, robots, and self-driving cars to "see" like insects, and has created an AI smart chip modeled on the optic nerve. In the future, a low-power chip and a lens will be sufficient to identify, track, and avoid obstacles during high-speed travel.


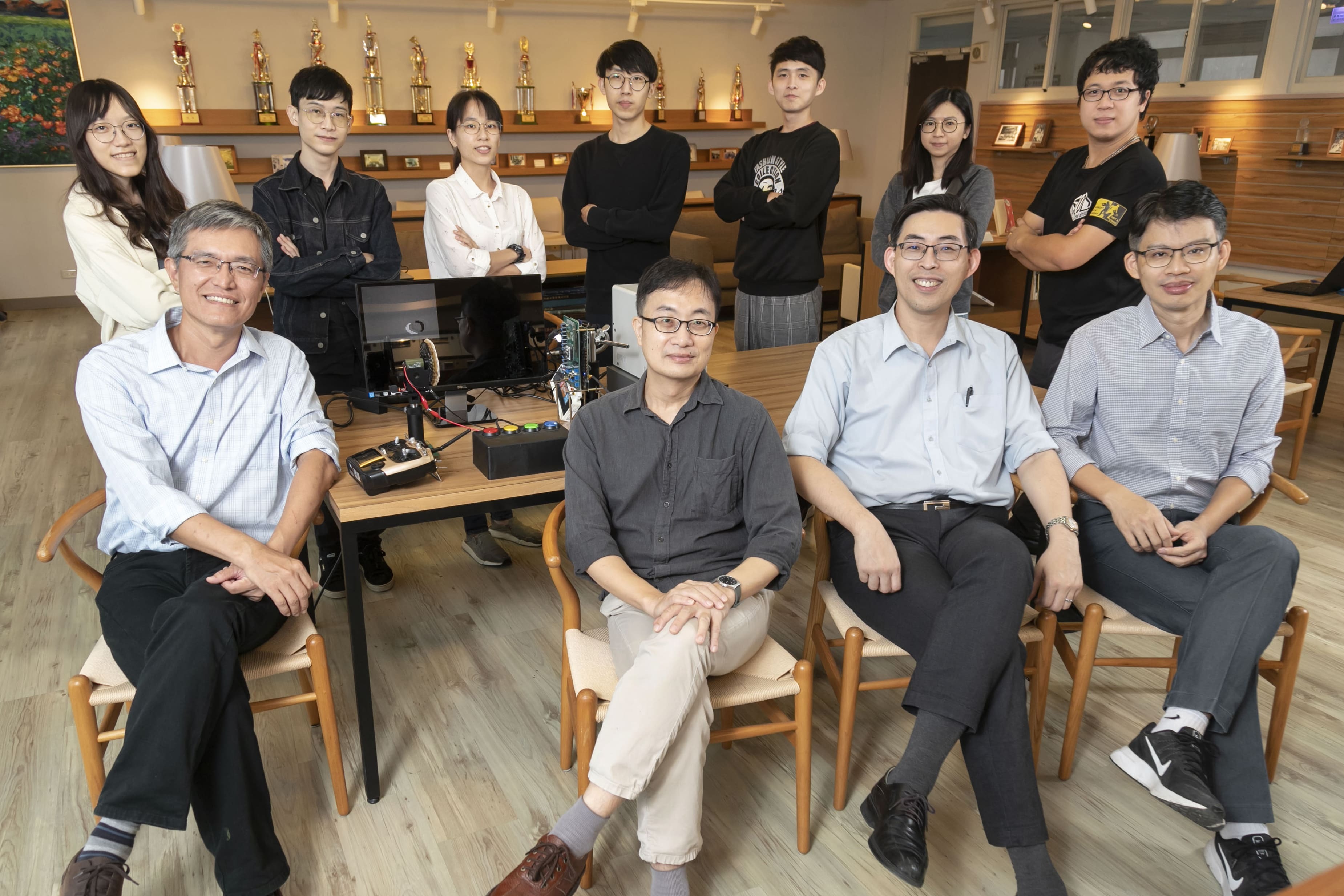

NTHU's cross-disciplinary bionic vision team consists of professors from the Department of Electrical Engineering (EE): Tang Kea-Tiong (first from right), Hsieh Chih-Cheng (second from left), and Liu Ren-Shuo (first from left); and Professor Lo Chung-Chuan from the Department of Life Science. Photo/Provided by NTHU

The bionic vision team's research results have been published in top international journals, including Nature Electronics and the IEEE Journal of Solid-State Circuits (JSSC). The team has also won the Ministry of Science and Technology's 2021 Future Technology Award.

Prof. Tang Kea-Tiong, who leads this large-scale research project, pointed out that current "computer dynamic vision" works like making a movie: it shoots and stores still images, then calculates the difference between frames. The process is slow and consumes a lot of power and memory. A drone that relies on it cannot fly fast or far.

To achieve a breakthrough, the NTHU EE team invited Prof. Lo Chung-Chuan from the Department of Life Science to collaborate. Prof. Lo studies the visual and spatial perception of insects. Inspired by fruit flies and bees, he derived an "optical flow method": the distance and speed of surrounding objects are judged from the optical flow, and only the outlines and geometric features of the scene are captured to identify obstacles and moving objects.

Lo Chung-Chuan said it is like walking down the road: you do not spend much effort looking at and remembering every building, sign, face, and license plate in front of you. You use just a little attention to notice whether a car-shaped or human-shaped object is approaching quickly. The new technology lets the camera focus only on the "changes" in the picture. If someone waves their hands or a ball flies past, only the necessary data is captured, which speeds up image processing and reduces power consumption.
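The idea of capturing only the "changes" can be illustrated with a minimal Python sketch. It is not the team's implementation: the function name `changed_pixels`, the brightness threshold of 10, and the tiny 3x3 frames are all assumptions made for illustration. The sketch compares two grayscale frames and reports only the pixels whose brightness changed noticeably, so a static background produces no data at all.

```python
def changed_pixels(prev_frame, curr_frame, threshold=10):
    """Return (row, col, delta) for pixels whose brightness changed by more
    than `threshold` (a hypothetical value chosen for this illustration)."""
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            delta = q - p
            if abs(delta) > threshold:
                events.append((r, c, delta))
    return events


# Two tiny made-up grayscale frames: only the centre pixel changes strongly.
prev_frame = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
curr_frame = [[10, 10, 10], [10, 200, 10], [10, 10, 12]]

events = changed_pixels(prev_frame, curr_frame)
print(events)  # [(1, 1, 190)]
```

Out of nine pixels, only one is reported; the small flicker in the corner falls below the threshold and is ignored.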

Tang Kea-Tiong also had the computer algorithm learn from the neural networks of biological brains through so-called "pruning" and "sparsification": unimportant weights in the calculation are set to zero and ignored. The more zeros there are, the faster the calculation runs and the less power it consumes. The team has also made a major breakthrough by performing calculations directly in memory, without moving data back and forth between the memory and the CPU for calculation and storage, which can save 90% of power consumption and time.
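As a rough illustration of why more zeros mean less work, the following Python sketch applies magnitude pruning, a common technique that is assumed here and not confirmed as the team's method. The function names `prune` and `sparse_dot`, the threshold of 0.1, and the example numbers are all hypothetical. Weights with small magnitude are set to zero, and the dot product then skips every zero weight.

```python
def prune(weights, threshold):
    """Set weights whose magnitude is below `threshold` to zero."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def sparse_dot(weights, inputs):
    """Dot product that skips zero weights; also counts multiplications done."""
    total = 0.0
    multiplies = 0
    for w, x in zip(weights, inputs):
        if w == 0.0:
            continue  # a pruned weight costs no multiplication
        total += w * x
        multiplies += 1
    return total, multiplies


# Made-up example values, for illustration only.
weights = [0.5, -0.01, 0.02, -2.0]
inputs = [1.0, 2.0, 3.0, 4.0]

pruned = prune(weights, threshold=0.1)
result, multiplies = sparse_dot(pruned, inputs)
print(pruned)      # [0.5, 0.0, 0.0, -2.0]
print(multiplies)  # 2
```

Half of the weights are pruned in this toy case, so the dot product needs two multiplications instead of four, at the cost of a small change in the result.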

Hsieh Chih-Cheng is responsible for developing in-sensor computing, for applications such as smart surveillance and access control systems. A simple camera alone can determine whether a person has appeared in the frame, or merely an irrelevant dog or cat. Once the camera determines that a person is there, it sends the face data to the computer's recognition system to identify who the person is. Since there is no need to analyze every frame in detail, a few microwatts of power are enough, which is about one-millionth of the power consumption of a light bulb.

Liu Ren-Shuo is an architect of neural-like smart chips that integrate software and hardware. He and his team work with leading domestic IC design companies and TSMC to develop a new generation of AI chips. He said that key main control chips for drones are currently being developed to build the autonomous technology that bionic drones need.

NTHU's interdisciplinary bionic vision team develops low-power drone technology that mimics biological vision. Photo/Provided by NTHU

This cross-disciplinary team of life science and electrical engineering professors has been working together for four years and has made many important breakthroughs. Looking back to the beginning, however, the members needed a lot of time to get used to one another. Lo Chung-Chuan, who is responsible for the biological science, would often talk about how clever a certain function of the fruit fly is, and the electrical engineering professors would always ask, "How many bits does this calculation take?" Lo Chung-Chuan couldn't help but laugh: "Why does something so natural and simple in biology become so difficult in electrical engineering?"

But Lo Chung-Chuan's way of thinking has gradually influenced the electrical engineering professors, who originally thought only about how to build things. In the future, the team plans to use the new technologies to create "search and rescue bees" that are smaller than drones. When disasters such as earthquakes occur, they could travel through dangerous environments to save people in time. The team may even develop smaller drones that are undetectable.